This article includes contributions from Quyen Tu.

How Well Can You Spot AI?

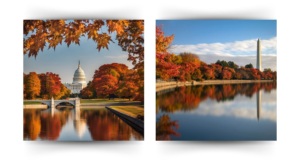

Below are two images: one is a real photo and the other is AI-generated. Can you guess which is the real photo? We’ll reveal the answer at the end of the blog!

Introduction to Artificial Intelligence

In January of 2024, a robocall went around New Hampshire purporting to be from President Joe Biden. “Your vote makes a difference in November, not this Tuesday,” the voice said, attempting to dissuade early voters from turning out at the polls. Authorities deduced that this fraudulent message was produced by artificial intelligence (“AI”). As AI evolves, manipulative incidents like this are likely to occur more frequently, and improving public knowledge of AI should be an especially high priority in this contentious election year. Going forward, it is increasingly important to safeguard our electoral system from AI abuses like the one in New Hampshire.

The first successful AI computer program was developed in 1952 to play checkers. Since then, AI has steadily seeped into our daily lives. Today, we use narrow AI when performing specific and limited tasks, like when we ask Siri or Alexa to call our mom or run the robot vacuum. Generative AI, on the other hand, can produce novel media and outputs. ChatGPT is a popular example. These applications of AI are only scratching the surface of its vast potential.

Unfortunately, generative AI is already being exploited for malicious purposes. For instance, AI can impersonate others in writing, quickly producing letters, emails, and personalized messages. It can also imitate voices, as the New Hampshire robocalls showed: AI used previous speech samples to create new audio clips. Additionally, AI can generate images and videos, known as “deepfakes,” which depict an individual saying or doing something they never actually said or did. Since the quality of deepfakes is constantly improving, they are increasingly hard to detect.

Relying on AI for information raises several ethical questions. AI systems can produce absurd and erroneous responses, a phenomenon known as “hallucinating.” More concerning still, AI systems have been shown to reflect human biases, including racism and sexism. While AI has many helpful uses, it is crucial to be cognizant of the problematic outputs it can generate.

Artificial Intelligence and Elections

AI is already being used to assault our democracy, and these underhanded plots can have widespread consequences. For example, cyber-attacks, such as phishing schemes, are used to gain access to sensitive data and voting infrastructure, and their efficacy and scale increase with the use of AI. These hacks can originate from foreign or domestic sources. Manipulative techniques are also used to further voter suppression, particularly by targeting disinformation at voters in battleground states and at those who already face systemic obstacles at the polls. Additionally, there is a serious risk of misinformation being disseminated at state and local levels due to a lack of attention on smaller elections, steep declines in local news outlets, and insufficient resources to identify and combat these attacks.

During an election year, bad actors can leverage AI to create and spread misinformation and chaos. For instance, deepfakes of candidates making controversial statements can dramatically impact campaigns. The problem is exacerbated by the “liar’s dividend,” in which voters incorrectly assume legitimate media to be fake because real and fake are so difficult to tell apart. A candidate could then allege that damaging recordings or images are AI-generated to escape the consequences of their actions. As AI technology improves, it will be increasingly difficult for voters to discern fact from fiction.

While some progress has been made, much work remains to manage AI effectively. In 2023, President Biden issued an Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence, urging federal agencies to regulate AI within their spheres of government. The Federal Communications Commission has taken some steps by proposing a plan to require politicians to disclose the use of AI in advertisements. The Federal Election Commission is similarly considering actions to restrict candidates from using AI to misrepresent opponents. A comprehensive solution could be modeled after the European Union’s groundbreaking Artificial Intelligence Act, which categorizes AI by risk level, banning uses deemed “unacceptable” and imposing strict regulations on potentially dangerous forms. However, Congress has yet to enact any AI legislation, although three bipartisan-sponsored bills have been voted out of the Senate Rules Committee. Unfortunately, none is likely to pass until after the November election.

Many states have stepped up to regulate the use of AI. The National Conference of State Legislatures offers a comprehensive chart of state actions, and Ballotpedia provides an AI deepfake legislation tracker to help nonprofits monitor developments across all 50 states.

What Can Nonprofits Do About the Misuse of AI?

In the absence of government action, nonprofits can take a few measures to safeguard against the misuse of AI heading into the November election:

- Educate their communities about common risks such as how to spot AI and how to maintain personal security online. To take it a step further, translate these educational materials into other languages for wider reach and impact.

- Be a trusted and reliable source for accurate information about the election.

- Always verify. Use tools to screen for AI writing and identify false information.

- Learn more about AI and elections, for example through the Brennan Center for Justice’s “AI and Democracy” resources.

- Lobby state and federal officials to pass new laws designed to combat the misuse of AI and the dissemination of false or misleading information. Nonprofits need to be mindful of their lobbying limits. Check out our resources for best practices.

- Find ways that AI can benefit nonprofits, such as improving organization and efficiency — it’s not all bad and scary!

Answer to AI photo quiz: The left image is AI-generated, while the right is a real photo of Washington, DC.